instruments

installations

Both human hands and plant soil can be read through capacitive touch sensing, because both our bodies and plants conduct electricity. earth and touch uses this shared conductivity to start a conversation between humans and plants. The conversation is not in human language or plant language. Instead, it is mediated by the machine, in a language unfamiliar to both of us. This creates a level playing field on which we can begin to communicate.

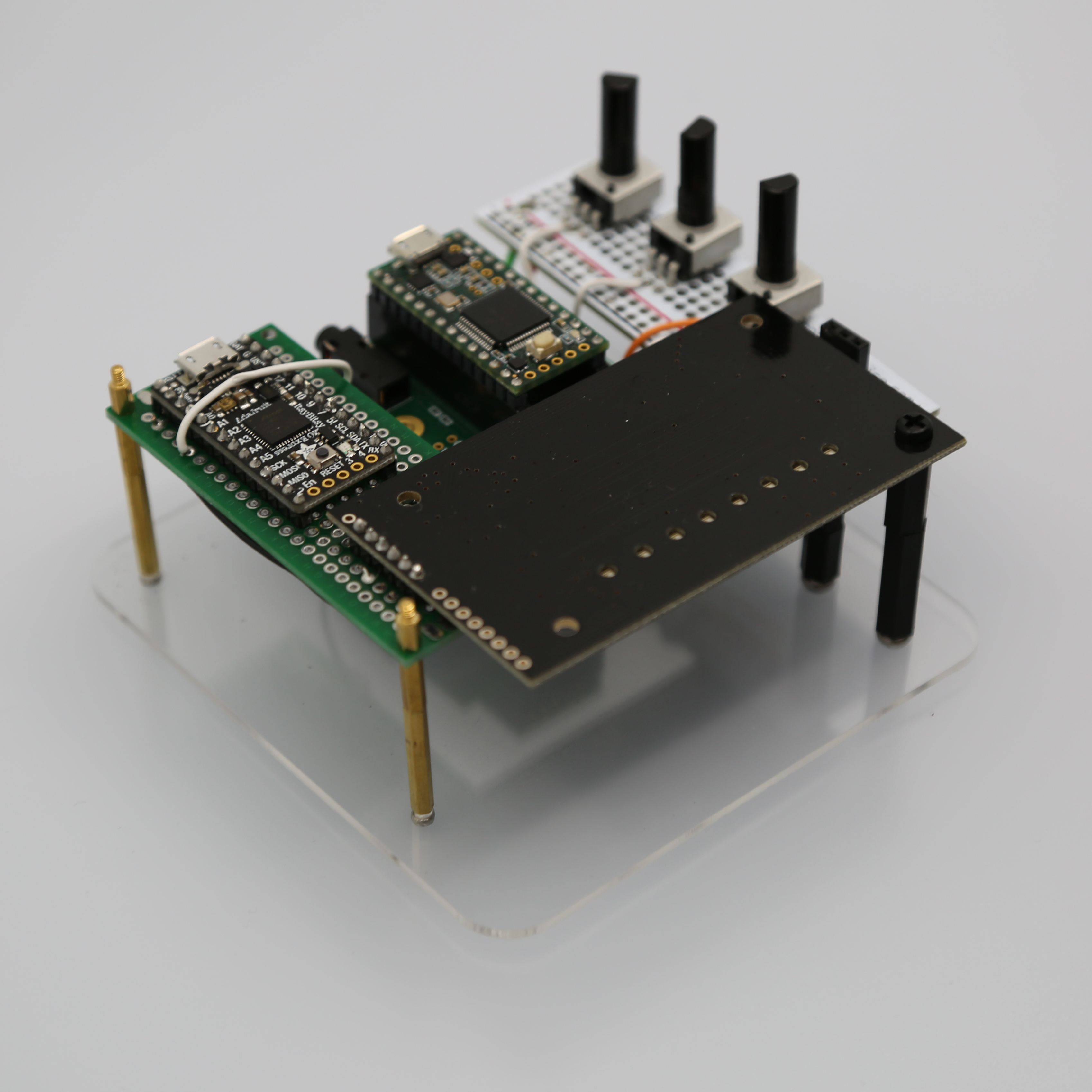

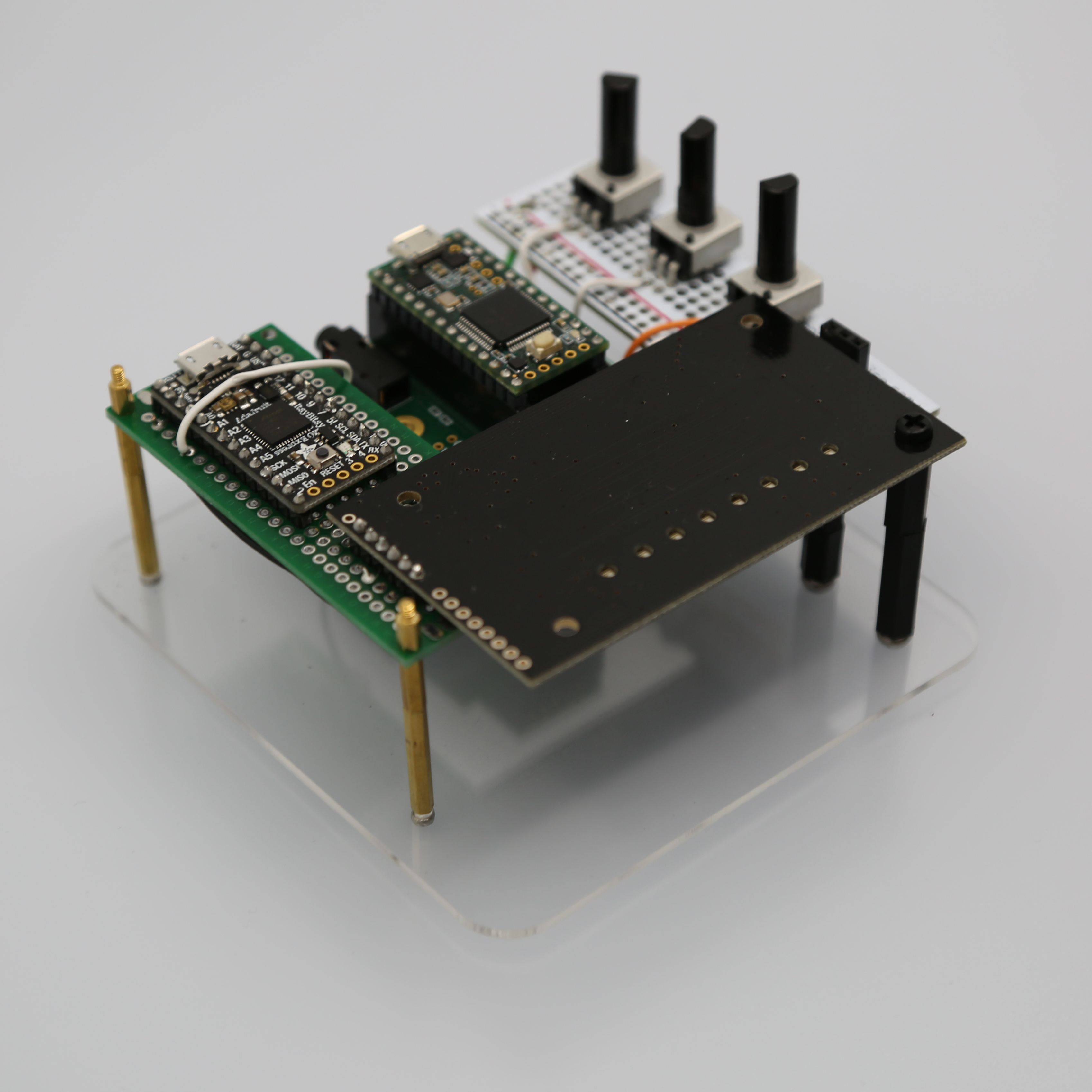

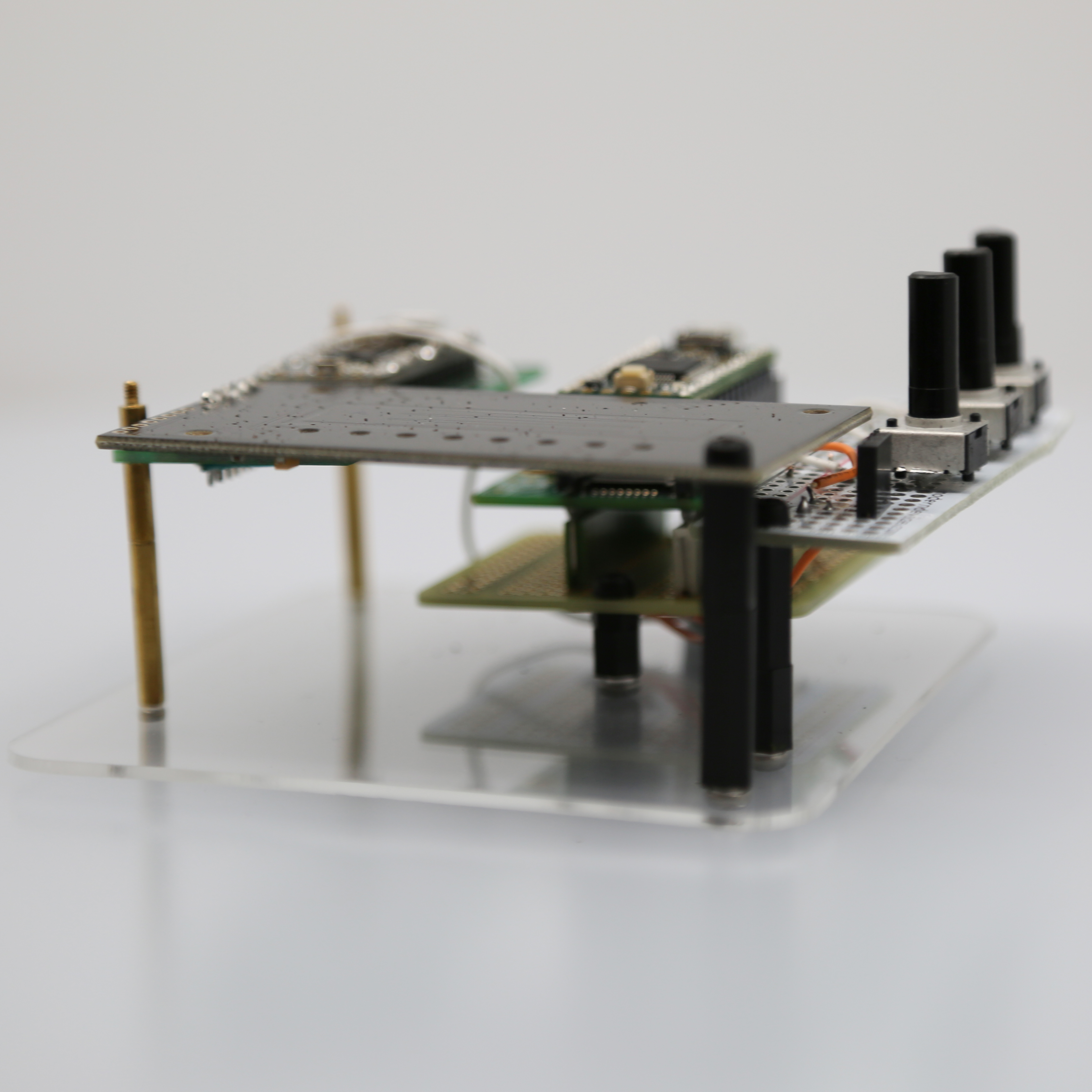

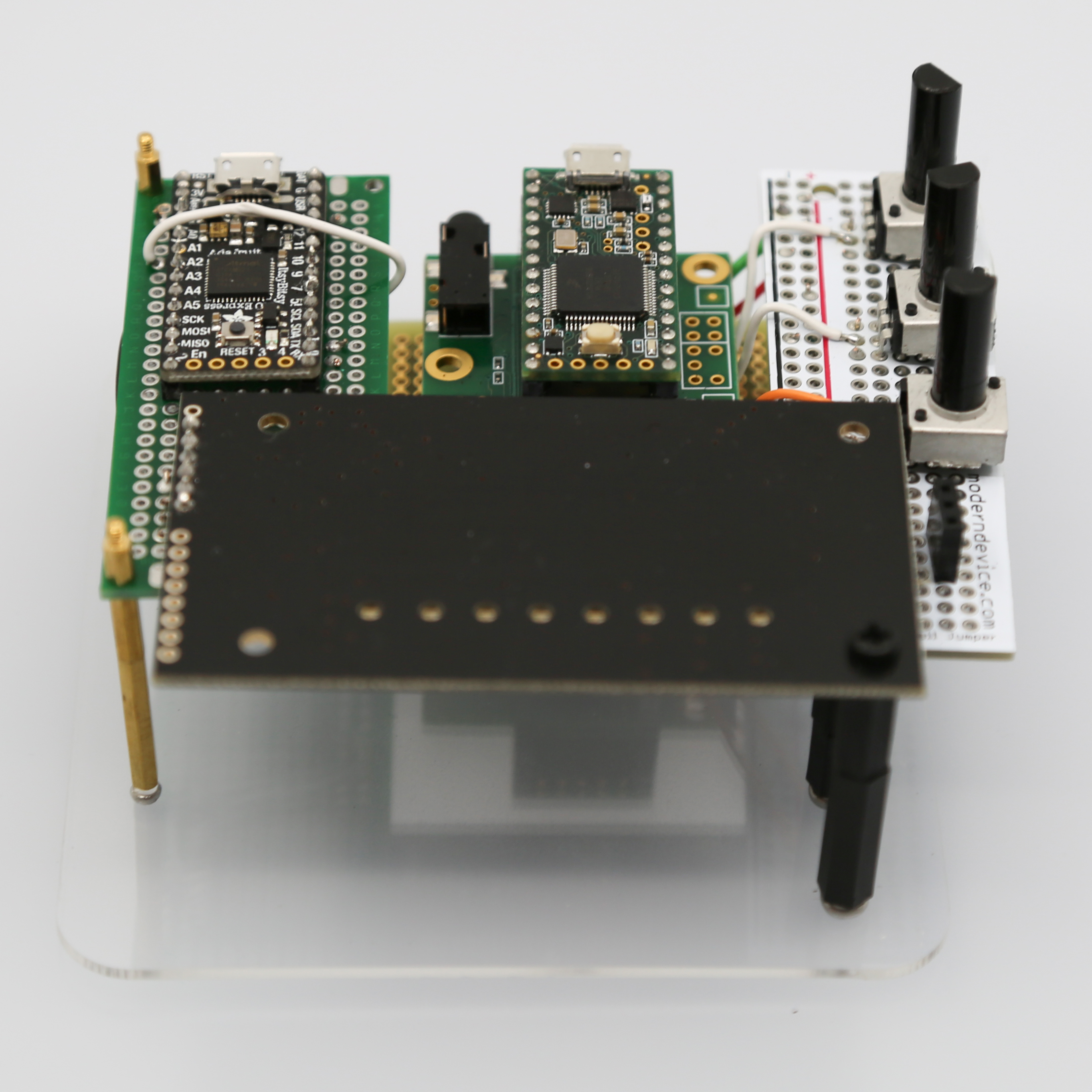

To create the instrument, I connected several analog inputs to a Teensy microcontroller. The Teensy is attached to the Teensy Audio Shield, a lovely board that lets it make music. PJRC, the company that makes both boards, also provides an audio synthesis library for Arduino that works with an online interface, the PJRC Audio System Design Tool. Using these, I built an interconnected sound-generating network in which all inputs for human touch and plant soil interact with one another. When one wire is touched, it changes multiple parts of the system rather than just one. The result is a device through which plants and I can talk to one another.
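The idea that one touched wire changes several parts of the system can be illustrated with a weighted mapping in which every input feeds more than one synthesis parameter. This is a hypothetical Python model, not the instrument's Teensy code: the input names, parameter names, and weights are all invented for illustration.

```python
# Hypothetical sketch: cross-coupled mapping from sensor readings to synth parameters.
# Input names, parameter names, and weights are illustrative assumptions,
# not the values used in earth and touch.

INPUTS = ["hand_1", "hand_2", "soil_1", "soil_2"]
PARAMS = ["osc_freq", "filter_cutoff", "reverb_mix"]

# Each row says how strongly one input pushes each parameter.
# Every input touches more than one parameter, so no control is isolated.
WEIGHTS = {
    "hand_1": {"osc_freq": 0.6, "filter_cutoff": 0.3, "reverb_mix": 0.1},
    "hand_2": {"osc_freq": 0.2, "filter_cutoff": 0.5, "reverb_mix": 0.3},
    "soil_1": {"osc_freq": 0.1, "filter_cutoff": 0.2, "reverb_mix": 0.7},
    "soil_2": {"osc_freq": 0.4, "filter_cutoff": 0.4, "reverb_mix": 0.2},
}


def map_inputs(readings):
    """Combine normalized readings (0.0-1.0) into parameter levels (0.0-1.0)."""
    totals = {p: 0.0 for p in PARAMS}
    for name, value in readings.items():
        value = min(max(value, 0.0), 1.0)  # clamp noisy sensor values
        for param, weight in WEIGHTS[name].items():
            totals[param] += weight * value
    # Divide by the largest possible sum so each parameter stays within 0..1.
    max_sums = {p: sum(WEIGHTS[i][p] for i in INPUTS) for p in PARAMS}
    return {p: totals[p] / max_sums[p] for p in PARAMS}
```

Touching only `hand_1` raises all three parameters by different amounts, so a single gesture reshapes the whole sound rather than one isolated control.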

surrounding sound is programmed in SuperCollider and Arduino using two microcontrollers. It does not need to be connected to a computer, because it is meant to be taken outdoors to listen to environments. It has been shown in both performance and installation, paired with generative audiovisual projections that present the locations it recorded. The performance visual, an excerpt of which is on the right, distorts images and videos of these locations according to the volume of sound from the performer, the instrument, and a field recording of the ambient noise at one site. The installation visual uses a three-dimensional space to take viewers to the different sites and their sounds. The sound of each site is loudest at the center of its image cube and falls silent once the camera moves beyond a certain distance from it.
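The installation's spatial rule (loudest at a cube's center, silent past some distance) can be modeled as a gain that falls off with the camera's distance from each cube. This is a minimal sketch assuming a linear falloff and a single cutoff `radius`; the function names and the curve are illustrative, and the piece itself may use a different falloff.

```python
import math


def site_gain(camera, cube_center, radius):
    """Return a gain in [0, 1] for one site's audio.

    Gain is 1.0 when the camera sits at the cube's center and falls
    linearly to 0.0 at `radius` (an assumed cutoff distance).
    """
    distance = math.dist(camera, cube_center)
    if distance >= radius:
        return 0.0  # too far away: this site is silent
    return 1.0 - distance / radius


def mix_gains(camera, centers, radius):
    """Compute one gain per site for the current camera position."""
    return [site_gain(camera, c, radius) for c in centers]
```

A linear ramp is the simplest choice; an equal-power or exponential curve would make the fade sound smoother as the camera drifts between sites.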

Creating mutual understanding begins with communication. surrounding sound seeks to facilitate a conversation with the weather.

zither sin string is an electronic instrument driven by gestures. It uses two ultrasonic distance sensors and an Adafruit APDS9960 breakout board for gesture tracking. The left, right, up, and down gestures control the output of single notes or chords, while the distances between the performer's hands and the instrument control pitch. I designed a Karplus-Strong-based synthesis engine in Max to play the notes, but the instrument can also be used as a MIDI controller.
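Karplus-Strong synthesis models a plucked string as a burst of noise circulating in a delay line whose length sets the pitch, with an averaging filter that damps high frequencies on every pass. The Python sketch below shows the textbook algorithm; it is not the Max patch used in zither sin string. The `distance_to_freq` helper, its centimeter range, and its frequency bounds are hypothetical, included only to show how a hand distance could select a pitch.

```python
import random

SAMPLE_RATE = 44100


def karplus_strong(freq, duration, decay=0.996, sample_rate=SAMPLE_RATE, seed=None):
    """Render a plucked-string tone as a list of floats in [-1, 1]."""
    rng = random.Random(seed)
    period = max(2, int(round(sample_rate / freq)))  # delay-line length in samples
    # Excite the "string" with a burst of white noise.
    buffer = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for i in range(int(duration * sample_rate)):
        idx = i % period
        nxt = (idx + 1) % period
        out.append(buffer[idx])
        # Average two adjacent samples (a simple low-pass filter), apply decay,
        # and write the result back into the delay line.
        buffer[idx] = decay * 0.5 * (buffer[idx] + buffer[nxt])
    return out


def distance_to_freq(distance_cm, near=5.0, far=50.0, low=110.0, high=880.0):
    """Hypothetical mapping: closer hands give higher pitch."""
    d = min(max(distance_cm, near), far)
    t = (d - near) / (far - near)  # 0.0 at `near`, 1.0 at `far`
    # Interpolate in log-frequency so equal hand movements give equal intervals.
    return high * (low / high) ** t
```

For example, `karplus_strong(distance_to_freq(20.0), 1.0)` renders one second of a string pitched by a hand held 20 cm away under these assumed bounds.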

The following video documents a performance with zither sin string.

This installation collects my MFA thesis of the same name into a hybrid performance-exhibition space.

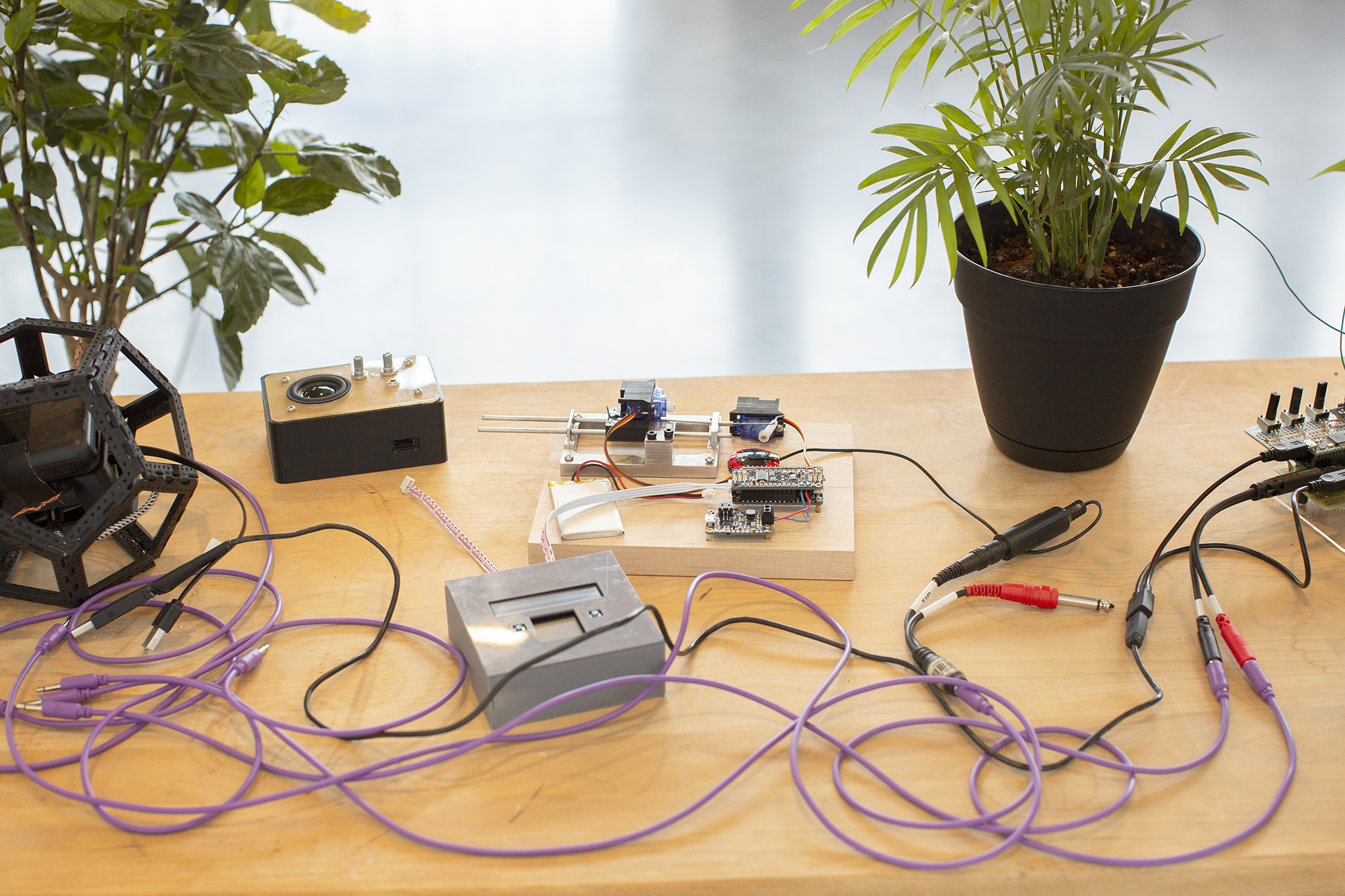

Throughout the exhibition, each of the five speakers played one channel of audio corresponding to one track from the thesis album. The audio was played at a very low volume so that visitors had to approach each speaker individually. The instruments created during my degree were presented on the table alongside the plants they communicate with. Every other day, the three speakers in the back were turned off and the two side speakers were turned to face outward for a short live performance demonstrating the instruments.

Instruments featured: weather events, weather invents, surrounding sound, earth and touch

The online exhibition page can be viewed here.

Documentation courtesy of Steffaine Padilla.

untitled arctic poem uses real-time generative audio and video to convey how historical and contemporary factors intertwine in the changing ecology of the Arctic Circle. Public-domain visuals and audio are spliced, layered, and edited by the program at random. The installation used a dome speaker so that viewers heard the audio only when standing beneath it.
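At its simplest, random splicing means repeatedly choosing a source clip, an in-point, and a segment length until the timeline is filled. The sketch below plans such a sequence; the clip names, durations, and segment-length bounds are hypothetical, and the layering and processing the piece also performs are not modeled here.

```python
import random
from dataclasses import dataclass


@dataclass
class Segment:
    clip: str      # source file name (hypothetical)
    start: float   # in-point within the clip, in seconds
    length: float  # segment duration, in seconds


def plan_splices(clips, total, min_len=2.0, max_len=8.0, seed=None):
    """Randomly splice segments from `clips` (name -> duration) to fill `total` seconds."""
    rng = random.Random(seed)
    names = list(clips)
    timeline = []
    remaining = total
    while remaining > 1e-9:
        name = rng.choice(names)
        # A segment can't be longer than its clip or than the time left to fill.
        length = min(rng.uniform(min_len, max_len), clips[name], remaining)
        start = rng.uniform(0.0, clips[name] - length)
        timeline.append(Segment(name, start, length))
        remaining -= length
    return timeline
```

Passing a `seed` makes a sequence reproducible for testing; leaving it unset gives a new edit on every run, closer to a live generative piece.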

This interactive installation uses motion tracking to manipulate videos. A generative system splices and organizes the clips, while the position of viewers changes the visual processing. Programmed in Max/MSP for Kinect using KiCASS.

Programmers: Ollie Rosario, Katie Lee, Cindy Chow, Ramsey Sadaka

Emerald was composed collaboratively in response to the task of creating a cohesive piece by programming novel controllers. This rehearsal video documents the outcome of the exercise. I played an instrument I built, zither sin string.

Programmers: Ollie Rosario, Ramsey Sadaka

Choreographer, Dancer: Gemma Tomasky

This piece, for dancer and virtual cellist, explores the interaction between the natural and the electronic. The dancer and the cellist each control the sound output with GameTrak controllers. At the extremes of their range, the controllers also distort the organic sounds and alter the cello's timbre.
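One way to make a controller distort the sound only at the extremes of its range is to pass the signal through unchanged below a threshold and raise the drive of a soft-clipping waveshaper above it. The threshold, maximum drive, and `tanh` curve below are assumptions for illustration; the piece's actual processing is not documented here.

```python
import math


def drive_from_control(position, threshold=0.8, max_drive=10.0):
    """Map a normalized controller position (0-1) to a waveshaper drive.

    At or below `threshold` the drive is 1.0 (no distortion); above it,
    drive rises linearly to `max_drive` at the extreme (an assumed curve).
    """
    position = min(max(position, 0.0), 1.0)
    if position <= threshold:
        return 1.0
    t = (position - threshold) / (1.0 - threshold)
    return 1.0 + t * (max_drive - 1.0)


def waveshape(samples, drive):
    """Soft-clip with tanh, normalized so a full-scale input stays at +/-1."""
    if drive <= 1.0:
        return list(samples)  # clean pass-through in the normal playing range
    norm = math.tanh(drive)
    return [math.tanh(drive * s) / norm for s in samples]
```

Keeping the clean range wide leaves ordinary movement undistorted, so the distortion reads as a consequence of reaching the physical limits of the controller.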

Programmers: Ollie Rosario, Chantelle Ko, Elizabeth Feng

Violin: Chantelle Ko

Fire and Ice explores the relationships between extremes. The two contrasting elements are heard through a range of their manifestations: volcanic eruptions, shifting ice floes, burning houses, and more. We progress through their similar and dissimilar sounds and forces, keeping their potential for destruction in mind.

Programmers: Ollie Rosario, Micki Lee-Smith

Choreographer, Dancer: Gemma Tomasky

The Bells is a piece inspired by Edgar Allan Poe's poem of the same name. Our interpretation of the poem's shifting tone gave the piece a narrative of instability. The character moves through increasingly chaotic and dangerous zones, growing more scared and confused, before finally being caught in the center. The sound samples in each zone reflect this progression, as well as the themes of loss and madness.